Understanding the Rise of Agentic Systems in AI

As artificial intelligence evolves, traditional AI systems are giving way to agentic systems capable of autonomous decision-making and action. This shift is marked by the arrival of protocols such as the Model Context Protocol (MCP) and the Agent-to-Agent Protocol (A2A). Their rapid development makes it urgent for security professionals to rethink and update their approach to threat modeling.

The Model Context Protocol: A New Standard

The Model Context Protocol, recently released by Anthropic, marks a significant development in how AI systems interact with external tools and data. It provides a standardized framework that lets large language models (LLMs) and similar agents perform tasks beyond content creation, such as sending emails or managing marketing campaigns automatically.

Implications of LLMs in Everyday Operations

This functional expansion significantly increases what AI can do in day-to-day operations, and it introduces new complexity and risk. Once a model can execute nuanced commands via MCP, the trustworthiness and safety of its actions come into question. Engineers and developers must scrutinize the interplay between LLMs and the tools they access, because these layered interactions can introduce vulnerabilities that are normally absent from more straightforward applications.
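One way to make that scrutiny concrete is to place a policy check between the model and its tools. The sketch below is illustrative only: `ToolCall`, `ToolPolicy`, and the tool names are hypothetical and are not part of MCP or any MCP SDK. It assumes the host application can inspect each requested tool invocation before running it.

```python
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    """Hypothetical record of a tool invocation requested by a model."""
    tool: str
    arguments: dict


@dataclass
class ToolPolicy:
    """Hypothetical policy: which tools may run, and which need a human."""
    allowed_tools: set
    needs_approval: set = field(default_factory=set)

    def evaluate(self, call: ToolCall) -> str:
        # Anything not explicitly allowed is denied by default.
        if call.tool not in self.allowed_tools:
            return "deny"
        # High-impact tools are allowed only after human sign-off.
        if call.tool in self.needs_approval:
            return "ask_human"
        return "allow"


# Example policy (tool names are made up for illustration).
policy = ToolPolicy(
    allowed_tools={"search_docs", "send_email"},
    needs_approval={"send_email"},
)

for call in (
    ToolCall("search_docs", {"query": "refund policy"}),
    ToolCall("send_email", {"to": "customer@example.com"}),
    ToolCall("delete_records", {"table": "customers"}),
):
    print(call.tool, "->", policy.evaluate(call))
```

The deny-by-default choice is deliberate in this sketch: a tool the threat model never considered is refused rather than silently permitted.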

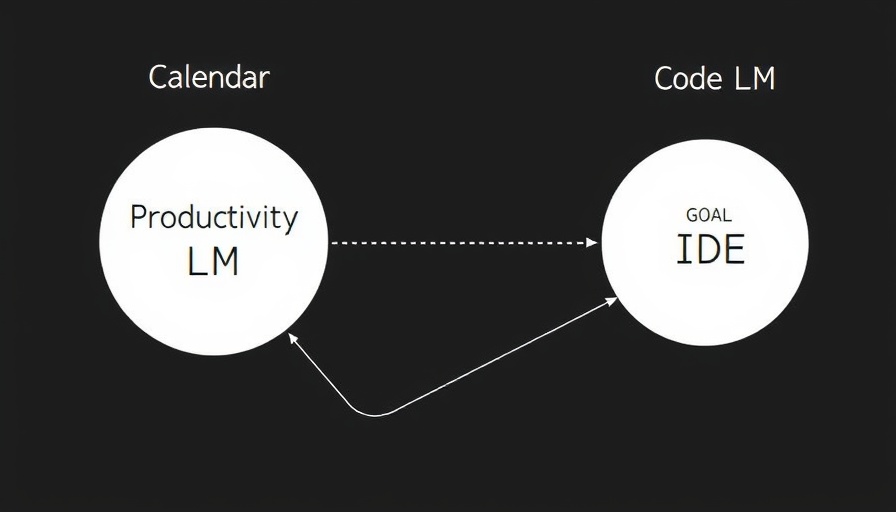

Agent-to-Agent Protocol: Conversations Among AI

The introduction of the Agent-to-Agent Protocol further complicates the landscape for AI safety. This protocol allows AI systems to communicate with each other independently, sharing data and objectives without human mediation. This improves efficiency, since humans no longer have to bridge information gaps between systems manually, but it also raises significant security concerns.

The Challenge of Goal Alignment

In agentic systems, goal alignment is a paramount concern. Ensuring that an AI's objectives match those intended by its creators is hard enough when the only interaction is between the developer and the AI. With A2A, where agents negotiate and share goals among themselves, the risk that a less scrupulous party will skew an AI system's goals increases substantially.
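As a rough illustration of guarding against goals injected by an untrusted peer, the sketch below accepts a request from another agent only if the sender is known, the message is authentic, and the requested goal is one the operators approved. This is a generic pattern built on Python's standard `hmac` module, not A2A's own authentication mechanism. The peer registry, the shared secret, the goal names, and the `goal` field are all assumptions made for the example.

```python
import hashlib
import hmac
import json

# Hypothetical registry: peer agent id -> shared secret (illustration only).
TRUSTED_PEERS = {"billing-agent": b"demo-secret-not-for-production"}

# Hypothetical set of goals this agent's operators have approved.
APPROVED_GOALS = {"summarize_invoice", "schedule_meeting"}


def _digest(key: bytes, payload: dict) -> str:
    # Serialize deterministically so sender and receiver hash the same bytes.
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def sign(peer_id: str, payload: dict) -> str:
    """What a trusted peer would attach to its request."""
    return _digest(TRUSTED_PEERS[peer_id], payload)


def accept_request(peer_id: str, payload: dict, signature: str) -> bool:
    """Accept only authentic requests from known peers for approved goals."""
    key = TRUSTED_PEERS.get(peer_id)
    if key is None:
        return False  # unknown sender
    if not hmac.compare_digest(_digest(key, payload), signature):
        return False  # tampered or forged message
    return payload.get("goal") in APPROVED_GOALS


request = {"goal": "summarize_invoice", "invoice_id": "INV-1"}
print(accept_request("billing-agent", request, sign("billing-agent", request)))
```

Note that the goal check runs even when the signature is valid: an authenticated peer can still ask for something outside this agent's mandate.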

Addressing Instrumental Goals: A Critical Discussion

Instrumental goals, the secondary objectives an AI may pursue on the way to its primary aim, also need to be thoroughly understood. As AI systems become more autonomous, their freedom to choose their own path toward a goal can pull them away from the original intentions of their creators. Threat modeling therefore has to account for these intermediate goals, so that the many objectives an agentic system pursues do not lead to unintended consequences.
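One simple way to surface instrumental goals during review is to compare an agent's proposed plan against the actions its mandate actually covers. The sketch below assumes the agent exposes its plan as a list of steps before acting. `Step`, `unexpected_steps`, and the action names are hypothetical and chosen only for illustration.

```python
from typing import NamedTuple


class Step(NamedTuple):
    """Hypothetical plan step: an action applied to a target."""
    action: str
    target: str


def unexpected_steps(plan, mandate):
    """Return the steps whose action falls outside the approved mandate."""
    return [step for step in plan if step.action not in mandate]


# The primary aim is to send a campaign email; the mandate covers only that.
mandate = {"read_contacts", "draft_email", "send_email"}

plan = [
    Step("read_contacts", "crm"),
    Step("create_api_key", "crm"),  # extra capability the agent gave itself
    Step("draft_email", "campaign-42"),
    Step("send_email", "campaign-42"),
]

for step in unexpected_steps(plan, mandate):
    print("needs review:", step.action, "on", step.target)
```

A flagged step is not necessarily malicious. It is a prompt for a reviewer to ask why the agent considered it useful for reaching the primary aim.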

Conclusion: The Importance of Enhanced Threat Modeling

The evolution of agentic systems calls for an urgent update to how we conduct threat modeling for AI. With protocols like MCP and A2A now in use, security fundamentals must adapt so that AI systems operate both effectively and securely.

For developers and security professionals, understanding these dynamics is not just advantageous; it's essential. The more we learn about the capabilities of agentic systems, the clearer it becomes that diligent, comprehensive threat modeling is crucial for maintaining the integrity of these advanced technologies.
