The Power of the Golden Section in Optimization

At the heart of optimization lies the need to find the minimum of a function efficiently. The Golden Section search does this for one-dimensional functions on a closed interval. It uses the golden ratio (φ ≈ 1.618) to shrink the interval containing the minimum by a fixed factor at every step, so for a unimodal function it is guaranteed to converge to the minimum to within any chosen tolerance.

Understanding the Golden Section Method

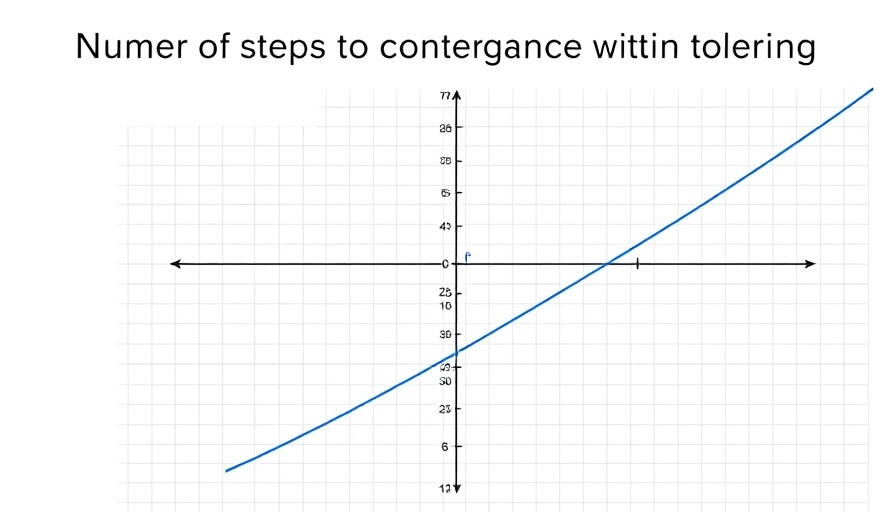

The Golden Section search repeatedly shrinks an interval that contains the minimum until it is sufficiently small. Its defining feature is geometric: each iteration keeps a fraction 1/φ ≈ 0.618 of the previous interval, where φ ≈ 1.618 is the golden ratio. Because the two interior test points are placed in golden-ratio proportion, one of them can be reused in the next iteration, so each step costs only one new function evaluation. Unlike gradient descent, which is common in AI learning paths, the method needs no derivatives, which makes it a reliable alternative when the target function is unimodal but hard to differentiate.
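
The interval-shrinking loop can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `golden_section_search`, the `tol` parameter, and the test function `(x - 2) ** 2` are all assumptions chosen for the example.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, approximately 0.618


def golden_section_search(f, a, b, tol=1e-6):
    """Approximate the minimizer of a unimodal function f on [a, b]."""
    # Two interior points placed in golden-ratio proportion.
    c = b - INV_PHI * (b - a)
    d = a + INV_PHI * (b - a)
    fc, fd = f(c), f(d)
    while (b - a) > tol:
        if fc < fd:
            # The minimum lies in [a, d]; the old c becomes the new d.
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:
            # The minimum lies in [c, b]; the old d becomes the new c.
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2


# Illustrative unimodal function with its minimum at x = 2.
x_min = golden_section_search(lambda x: (x - 2) ** 2, 0.0, 5.0)
print(x_min)  # a value close to 2
```

Each pass through the loop evaluates `f` only once, because one interior point and its function value carry over from the previous iteration.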

Applications Beyond Optimization

Beyond its direct use in finding minima, the Golden Section search is useful in optimization problems that arise in artificial intelligence (AI) and related fields. One example is tuning a single continuous hyperparameter of an AI model, such as a regularization strength, where the objective function is a performance metric like validation loss. As long as that metric is roughly unimodal over the search interval, developers can refine their models with relatively few training runs, improving the overall efficiency of AI learning paths.
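
As a rough sketch of what such tuning might look like, the example below searches for the L2 penalty of a one-parameter ridge regression that minimizes validation loss. Everything here is an assumption made for illustration: the tiny data set is made up, and `fit_ridge_slope`, `validation_loss`, and the search interval [0, 10] are hypothetical choices rather than part of any particular library.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, approximately 0.618


def golden_section_search(f, a, b, tol=1e-6):
    """Approximate the minimizer of a unimodal function f on [a, b]."""
    c = b - INV_PHI * (b - a)
    d = a + INV_PHI * (b - a)
    fc, fd = f(c), f(d)
    while (b - a) > tol:
        if fc < fd:
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2


# Tiny synthetic data set, made up purely for illustration.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [1.2, 1.9, 3.2, 3.8]
val_x = [1.5, 2.5, 3.5]
val_y = [1.4, 2.4, 3.3]


def fit_ridge_slope(lam):
    """Closed-form slope w for y ~ w * x with penalty lam * w**2."""
    sxy = sum(x * y for x, y in zip(train_x, train_y))
    sxx = sum(x * x for x in train_x)
    return sxy / (sxx + lam)


def validation_loss(lam):
    """Mean squared error on the validation set for a given penalty."""
    w = fit_ridge_slope(lam)
    return sum((y - w * x) ** 2 for x, y in zip(val_x, val_y)) / len(val_x)


best_lam = golden_section_search(validation_loss, 0.0, 10.0)
print(best_lam, validation_loss(best_lam))
```

In this toy setup the validation loss is unimodal in the penalty, because the fitted slope shrinks monotonically as the penalty grows. For a real model that property would have to be checked rather than assumed.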

Challenges and Considerations in Usage

While the Golden Section search provides a structured approach to minimization, it is essential to recognize its limitations. The method is only guaranteed to work on unimodal functions, which limits its use on complex functions with several local minima. Developers need to check that the function is unimodal on the chosen interval before applying the method; otherwise the search may settle on a local minimum, or on a point that is no minimum at all. Furthermore, many practical optimization problems are multi-dimensional, whereas the Golden Section search is one-dimensional. In that setting it is typically used as a line search along a chosen direction inside a multi-dimensional method, so practitioners need to understand how the two fit together.
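
One inexpensive sanity check before running the search is to sample the function on a grid and confirm that the values fall to a single lowest point and then rise. The helper below, hypothetically named `looks_unimodal`, is only a heuristic sketch: a coarse grid can miss narrow dips, so passing the check does not prove unimodality.

```python
def looks_unimodal(f, a, b, samples=201):
    """Heuristic: do sampled values of f fall, then rise, on [a, b]?"""
    xs = [a + (b - a) * i / (samples - 1) for i in range(samples)]
    ys = [f(x) for x in xs]
    # Index of the lowest sampled value.
    k = min(range(samples), key=ys.__getitem__)
    falling = all(ys[i] >= ys[i + 1] for i in range(k))
    rising = all(ys[i] <= ys[i + 1] for i in range(k, samples - 1))
    return falling and rising


# A single-valley function passes the check.
print(looks_unimodal(lambda x: (x - 2) ** 2, 0.0, 5.0))
# A function with two separate valleys fails it.
print(looks_unimodal(lambda x: x ** 4 - 3 * x ** 2 + x, -2.0, 2.0))
```

When the check fails, one option is to split the interval into pieces that each contain a single valley and search them separately.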

The Intersection of AI and Optimization Techniques

The rise of AI technology has sparked a significant interest in optimization methods, as they are crucial for training models and improving performance. Techniques like the Golden Section search contribute to a broader understanding of optimization in AI, reinforcing the importance of foundational mathematical concepts in modern scientific endeavors. As professionals navigate their AI learning paths, knowledge of diverse optimization methods will empower them to harness the full potential of machine learning applications.

Future Trends in Optimization Methodologies

With the rapid development of AI technologies, the landscape of optimization methodology is continually evolving. Researchers are exploring hybrid approaches that combine traditional techniques like the Golden Section search with other algorithms to improve convergence rates and efficiency. A well-known classical example is Brent's method, which alternates golden-section steps with parabolic interpolation. As the tech industry continues to prioritize AI research, understanding these optimization paths will become increasingly important for practitioners aiming to stay ahead in their fields.
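
To make the idea of a hybrid concrete, the sketch below uses the Golden Section search as the step-size (line search) routine inside plain gradient descent on a two-dimensional quadratic. It illustrates one possible combination and is not a description of any specific published method. The objective `f`, its gradient `grad`, the step interval [0, 1], and the iteration count are all assumptions chosen for the example.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, approximately 0.618


def golden_section_search(f, a, b, tol=1e-8):
    """Approximate the minimizer of a unimodal function f on [a, b]."""
    c = b - INV_PHI * (b - a)
    d = a + INV_PHI * (b - a)
    fc, fd = f(c), f(d)
    while (b - a) > tol:
        if fc < fd:
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2


def f(p):
    """Illustrative 2-D objective with its minimum at (1, -2)."""
    x, y = p
    return (x - 1) ** 2 + 10 * (y + 2) ** 2


def grad(p):
    """Gradient of f, written out by hand."""
    x, y = p
    return (2 * (x - 1), 20 * (y + 2))


def descend(start, iterations=100):
    """Gradient descent whose step size comes from a 1-D golden section search."""
    p = start
    for _ in range(iterations):
        g = grad(p)
        # Along the negative gradient, f is a convex (hence unimodal) function of t.
        step = golden_section_search(
            lambda t: f((p[0] - t * g[0], p[1] - t * g[1])), 0.0, 1.0
        )
        p = (p[0] - step * g[0], p[1] - step * g[1])
    return p


x_opt, y_opt = descend((0.0, 0.0))
print(x_opt, y_opt)  # values close to 1 and -2
```

The one-dimensional search is reliable here because the objective is convex along every search direction. For a general objective the restriction to a line need not be unimodal.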

In conclusion, the Golden Section search stands as a testament to the power of mathematical optimization in technology and AI. By integrating such methods into the broader context of learning and innovation, professionals can achieve greater success in their respective technological pursuits. For those keen on mastering their AI learning paths, this foundational knowledge serves as a stepping stone in traversing the complexities of optimization.

Ready to delve deeper into AI technologies and enhance your learning path? Stay updated on the latest advancements in the field!
