Understanding Parameter Estimation in Data Fitting

Fitting a distribution to data is a core skill in analytics. Whether you're analyzing river height data to predict floods or evaluating product demand over time, a fitted distribution helps uncover trends and supports informed decisions. This article explores three methods of estimating parameters when fitting a Gumbel distribution to data, giving you a foundation to dive deeper into statistical modeling and analytics.

The Significance of the Gumbel Distribution

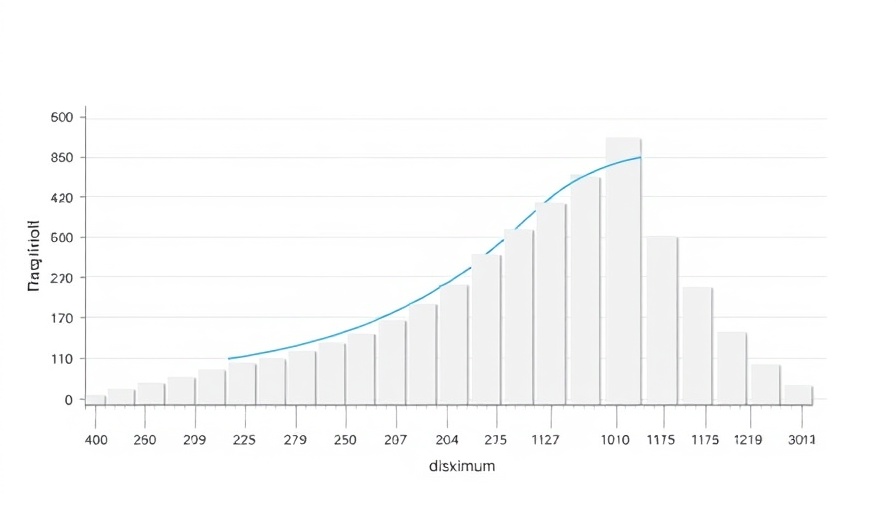

The Gumbel distribution is a statistical tool used to model extreme events: phenomena that occur rarely but are often pivotal in risk management, such as insurance payouts after disasters. By estimating its location parameter μ and scale parameter σ > 0, we can quantify the likelihood of significant adverse or advantageous events. The distribution is characterized by its probability density function, f(x; μ, σ) = exp(-z - exp(-z)) / σ, where z = (x - μ) / σ. This density is the starting point for each of the estimation methods that follow, and for applications in predictive modeling.
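To make the density concrete, here is a minimal Python sketch of it. The function names are illustrative and not from any library. The cumulative distribution function F(x) = exp(-exp(-z)) is not stated above; it is the standard closed form for this distribution and is included so an exceedance probability can be computed.

```python
import math


def gumbel_pdf(x, mu, sigma):
    """Density f(x; mu, sigma) = exp(-z - exp(-z)) / sigma, with z = (x - mu) / sigma."""
    z = (x - mu) / sigma
    return math.exp(-z - math.exp(-z)) / sigma


def gumbel_cdf(x, mu, sigma):
    """Standard closed-form CDF of the Gumbel distribution: exp(-exp(-z))."""
    z = (x - mu) / sigma
    return math.exp(-math.exp(-z))


def exceedance_probability(x, mu, sigma):
    """Probability that a single observation exceeds the level x."""
    return 1.0 - gumbel_cdf(x, mu, sigma)


# Hypothetical parameters: location 5.0, scale 1.2 (units are whatever the data uses).
print(gumbel_pdf(6.0, 5.0, 1.2))
print(exceedance_probability(8.0, 5.0, 1.2))
```

This sketch does not guard against overflow in exp(-z) when x lies very far below μ; production code would need to handle that case.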

Methods for Parameter Estimation: The Familiar Trio

This article covers three methods for estimating the parameters: the method of moments, maximum likelihood estimation (MLE), and solving for the roots of a function, that is, of the equations the estimates must satisfy. All three can be carried out in SAS software. Each method has its own advantages.

1. The Method of Moments (MoM)

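The Method of Moments is one of the most intuitive approaches. Rather than optimizing anything, MoM equates sample moments (such as the mean and variance) to the corresponding theoretical moments, which are functions of the distribution's parameters, and then solves for those parameters. For the Gumbel distribution the mean is μ + γσ, where γ ≈ 0.5772 is the Euler-Mascheroni constant, and the variance is π²σ²/6. Solving gives σ = s√6/π and μ = x̄ - γσ, where x̄ and s are the sample mean and standard deviation. This doesn't require sophisticated software and can often be done by hand, making it accessible to beginners.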
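As a rough illustration, the method of moments for the Gumbel distribution fits in a few lines of Python. The sketch relies on two standard facts about the distribution: its mean is μ + γσ (γ is the Euler-Mascheroni constant) and its variance is π²σ²/6. The function name `gumbel_mom` and the data values are hypothetical.

```python
import math
import statistics

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def gumbel_mom(data):
    """Method-of-moments estimates (mu, sigma) for a Gumbel distribution."""
    mean = statistics.fmean(data)
    sd = statistics.stdev(data)  # sample standard deviation (n - 1 denominator)
    sigma = sd * math.sqrt(6) / math.pi  # from variance = pi^2 * sigma^2 / 6
    mu = mean - EULER_GAMMA * sigma      # from mean = mu + gamma * sigma
    return mu, sigma


# Hypothetical annual peak river heights, for illustration only.
heights = [4.2, 5.1, 3.8, 6.4, 4.9, 5.6, 7.3, 4.4, 5.0, 6.1]
mu_hat, sigma_hat = gumbel_mom(heights)
print(mu_hat, sigma_hat)
```

Using the n - 1 denominator for the standard deviation is a choice; some treatments use n instead, and the difference shrinks as the sample grows.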

2. Maximum Likelihood Estimation (MLE)

The second method is Maximum Likelihood Estimation (MLE), which chooses the parameter values that make the observed data most probable, that is, the values that maximize the likelihood function. SAS's PROC UNIVARIATE computes MLEs for many distributions, including the Gumbel distribution. MLE is often preferred in professional analytics for its accuracy and efficiency, and it is especially useful with large datasets or more complex distributions.
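For readers without SAS, the idea behind MLE can be sketched in plain Python. This is not how PROC UNIVARIATE works internally; it is one simple way to maximize the Gumbel log-likelihood. For a fixed σ the best μ has a closed form, so the sketch runs a golden-section search over σ alone. The search bracket (0.05 to 5 sample standard deviations) and the assumption of a single peak inside it are choices made here, and the data are simulated.

```python
import math
import random
import statistics


def gumbel_loglik(data, mu, sigma):
    """Log-likelihood: sum of log f(x; mu, sigma) over the sample."""
    total = 0.0
    for x in data:
        z = (x - mu) / sigma
        total += -math.log(sigma) - z - math.exp(-z)
    return total


def mu_given_sigma(data, sigma):
    """Closed-form maximizer of the log-likelihood in mu for a fixed sigma."""
    x_min = min(data)  # shift by the minimum so every exponent is <= 0
    mean_exp = statistics.fmean([math.exp(-(x - x_min) / sigma) for x in data])
    return x_min - sigma * math.log(mean_exp)


def gumbel_mle(data):
    """Golden-section search over sigma on the profile log-likelihood."""
    sd = statistics.stdev(data)
    a, b = 0.05 * sd, 5.0 * sd  # assumed bracket for sigma
    tol = 1e-8 * sd

    def profile(s):
        return gumbel_loglik(data, mu_given_sigma(data, s), s)

    inv_phi = (math.sqrt(5) - 1) / 2
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if profile(c) > profile(d):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
    sigma = 0.5 * (a + b)
    return mu_given_sigma(data, sigma), sigma


# Simulated sample via the inverse CDF, with hypothetical true values mu=10, sigma=2.
random.seed(0)
sample = [10.0 - 2.0 * math.log(-math.log(random.random())) for _ in range(2000)]
mu_hat, sigma_hat = gumbel_mle(sample)
print(mu_hat, sigma_hat)
```

The search re-evaluates the profile at both interior points on every pass, which is wasteful but keeps the sketch short. A general-purpose optimizer over (μ, σ) jointly would serve equally well.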

3. Solving for Roots of Functions

The third method involves solving for the roots of equations derived from statistical functions, typically the equations obtained by setting the derivatives of the log-likelihood to zero. Root-finding can be computationally harder than the other two methods, but it is a powerful tool for more thorough analysis and can be adapted to complicated scenarios where they do not suffice. Using it effectively requires some programming and mathematical knowledge.
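One possible reading of the root-finding approach, sketched in Python: set the derivatives of the Gumbel log-likelihood to zero and solve. Eliminating μ leaves a single equation in σ, namely σ - x̄ + Σ xᵢ wᵢ / Σ wᵢ = 0 with wᵢ = exp(-xᵢ/σ), which the sketch solves by bisection. The function names, the bracket, and the simulated data are assumptions made for illustration.

```python
import math
import random
import statistics


def score_in_sigma(data, sigma):
    """Likelihood equation for sigma after mu has been eliminated."""
    x_min = min(data)  # shifting leaves the weighted mean unchanged and avoids overflow
    weights = [math.exp(-(x - x_min) / sigma) for x in data]
    weighted_mean = sum(x * w for x, w in zip(data, weights)) / sum(weights)
    return sigma - statistics.fmean(data) + weighted_mean


def bisect_root(f, lo, hi, tol=1e-12, max_iter=200):
    """Plain bisection; requires f(lo) and f(hi) to have opposite signs."""
    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("root is not bracketed")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_mid == 0.0 or hi - lo < tol:
            return mid
        if f_lo * f_mid < 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


def gumbel_mle_by_root(data):
    """Solve the likelihood equation for sigma, then recover mu in closed form."""
    sd = statistics.stdev(data)
    sigma = bisect_root(lambda s: score_in_sigma(data, s), 0.05 * sd, 5.0 * sd)
    x_min = min(data)
    mean_exp = statistics.fmean([math.exp(-(x - x_min) / sigma) for x in data])
    mu = x_min - sigma * math.log(mean_exp)
    return mu, sigma


# Simulated sample via the inverse CDF, with hypothetical true values mu=10, sigma=2.
random.seed(0)
sample = [10.0 - 2.0 * math.log(-math.log(random.random())) for _ in range(2000)]
mu_hat, sigma_hat = gumbel_mle_by_root(sample)
print(mu_hat, sigma_hat)
```

Bisection is slow but dependable once the root is bracketed. A Newton-type solver would converge faster at the cost of needing a derivative and a good starting value, for which the method-of-moments estimate is a natural candidate.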

Real-World Applications and Future Predictions

The practical applications of fitting distributions are vast and extend well into the realms of AI and machine learning. Those interested in AI learning paths can take inspiration from these model fitting techniques. As industries continue to embrace big data, understanding the implications of fitted models will define competitive advantage.

Consider predictive analytics in finance: parameters estimated by fitting distributions feed directly into forecasts of extreme losses, which helps mitigate risk. As AI and machine learning grow more sophisticated, their integration with statistical techniques like these will present new opportunities for innovation and growth in various sectors.

Challenges and Counterarguments in Parameter Estimation

Despite the advantages of fitting distributions, challenges remain. Accuracy depends on the quality of the input data, and erroneous data can lead to misleading results. Critics of the maximum likelihood method, for instance, argue that it can be mathematically intense and sensitive to sample size. Readers should weigh these trade-offs against their own analytical capabilities and resources.

Tools and Resources for Learning and Implementation

A range of tools and resources is available to budding analysts. Learning platforms focused on AI science can provide real-world case studies and hands-on experience with data fitting. Tools like SAS, Python, and R let users implement these methods in their own projects, which reinforces practical learning.

Conclusion: The Path Forward in Analytics

Equipped with the methods to estimate parameters when fitting distributions to data, you stand at the forefront of analytical capabilities. By delving deeper into statistics and machine learning, you're poised to unlock new levels of insight that can drive your projects—and indeed, entire industries—forward. As you chart your AI learning path, embrace these statistical tools to refine your predictive analytics skills and be part of the evolving tech landscape.

For those excited to amplify their understanding of AI and predictive analytics, consider exploring further resources and engaging in communities where you can apply these concepts practically.
