Diving into SMOTE: A Key Technique in AI Learning

Artificial Intelligence (AI) is revolutionizing industries by leveraging advanced algorithms to enhance decision-making and automate processes. One noteworthy technique within AI learning is the Synthetic Minority Over-sampling Technique (SMOTE). Designed to address class-imbalance problems, SMOTE generates synthetic examples of the under-represented class from the data already on hand.

Understanding the Mechanics of SMOTE

At its core, SMOTE is a method of oversampling rare events before a machine learning model is trained. Class imbalance can skew the results of AI learning models: a model that sees few examples of the rare class tends to predict that class poorly. By generating synthetic data points for the rare class, SMOTE helps ensure that the algorithm learns from a more balanced dataset. The foundational idea is simple: use linear interpolation between existing data points to create synthetic observations. This is achieved through the equation Z = P + u*(Q-P), where P and Q are two existing minority-class points, Z is the new synthetic point, and u is a random uniform variate on [0, 1].
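
The interpolation formula can be tried out directly. The following is a minimal sketch using only the Python standard library; the function name `interpolate` and the sample coordinates are illustrative assumptions, not part of any SMOTE library.

```python
import random


def interpolate(p, q, u):
    """Return Z = P + u*(Q - P), computed coordinate by coordinate."""
    return [pi + u * (qi - pi) for pi, qi in zip(p, q)]


# Two made-up points in a 2-D feature space (illustrative values only).
P = [1.0, 2.0]
Q = [3.0, 6.0]

# u = 0 returns P, u = 1 returns Q, and u = 0.5 returns the midpoint.
midpoint = interpolate(P, Q, 0.5)
print(midpoint)  # [2.0, 4.0]

# In SMOTE, u is drawn at random, so Z lands somewhere on the segment P-Q.
rng = random.Random(0)
u = rng.random()  # uniform draw in [0, 1)
Z = interpolate(P, Q, u)
print(Z)
```

Because u stays between 0 and 1, every coordinate of Z lies between the corresponding coordinates of P and Q.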

Creating Synthetic Data with SMOTE

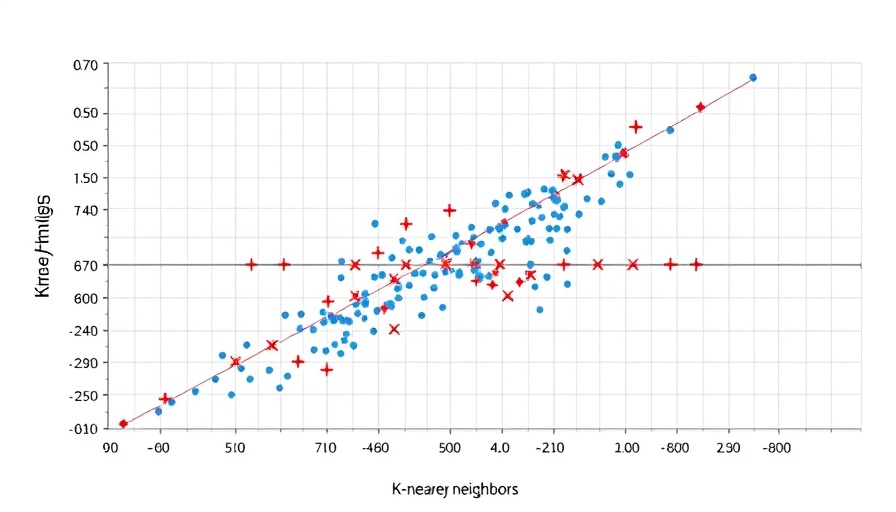

To visualize SMOTE, imagine plotting the minority-class data points on a graph. You randomly select a point, referred to as P, and then identify its nearest neighbors within the same class. From these neighbors, another point, Q, is chosen at random. A synthetic point is generated somewhere between P and Q on the line segment connecting them. Because each new point lies between two real observations, the synthetic data stays within the region the minority class already occupies while evening out the class frequencies.
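
The procedure described above can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not a reference implementation: the function name `smote_sketch`, the parameters `k` and `seed`, the Euclidean distance, and the toy points are all choices made for this example, and library implementations may differ in how they pick P and its neighbors.

```python
import math
import random


def smote_sketch(minority, n_synthetic, k=5, seed=0):
    """Generate synthetic points by interpolating between a randomly chosen
    minority point P and one of its k nearest minority neighbors Q."""
    if len(minority) < 2:
        raise ValueError("need at least two minority points")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbors than other points
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))  # pick P at random
        p = minority[i]
        # Rank every other minority point by Euclidean distance to P.
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: math.dist(p, minority[j]))
        q = minority[rng.choice(others[:k])]  # pick Q among the k nearest
        u = rng.random()  # uniform draw in [0, 1)
        synthetic.append([pi + u * (qi - pi) for pi, qi in zip(p, q)])
    return synthetic


# Toy minority class: five made-up points in the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
new_points = smote_sketch(minority, n_synthetic=10, k=2)
print(len(new_points))  # 10
```

Each generated point lies on a segment between two real minority points, so in this toy example all of them stay inside the unit square.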

The Impact of SMOTE in AI Learning Paths

Implementing SMOTE is particularly beneficial for industries where occurrences of certain events are rare but critical, such as fraud detection or rare disease diagnosis. By integrating SMOTE, AI applications can become better at recognizing the rare class, which supports better predictions and decision-making. As organizations increasingly harness the power of AI, knowledge of processes like SMOTE becomes increasingly valuable.
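
When judging whether a model handles rare events well, raw accuracy can be misleading. The sketch below uses made-up counts (990 normal cases, 10 rare ones) and a hypothetical model that never predicts the rare class; the helper functions are written for this example only.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of actual positives that the model identified."""
    true_pos = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual_pos = sum(t == positive for t in y_true)
    return true_pos / actual_pos if actual_pos else 0.0


# Illustrative data: 990 normal cases (0) and 10 rare events (1).
y_true = [0] * 990 + [1] * 10
# A model that always predicts "normal".
y_pred = [0] * 1000

print(accuracy(y_true, y_pred))  # 0.99
print(recall(y_true, y_pred))    # 0.0
```

The model scores 99% accuracy while missing every rare event, which is why minority-class metrics such as recall are worth checking before and after rebalancing.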

Future Predictions: Expanding the Use of Synthetic Data

The use of techniques like SMOTE is expected to grow as AI technology advances. Future innovations might enhance the variability of synthetic data generation. For example, incorporating external variables and causal models could lead to even more realistic representations of minority classes. As AI becomes more ubiquitous in business, understanding how to use simulation methods like SMOTE effectively will be a crucial part of the AI learning path.

Potential Challenges and Considerations

While SMOTE presents numerous advantages in addressing data imbalance, it’s important to recognize potential pitfalls. Every synthetic point is derived from a handful of existing observations, so generating synthetic data indiscriminately can lead to overfitting and reduce model generalizability. This makes it imperative for AI practitioners to apply SMOTE judiciously, carefully assessing the effectiveness of the generated data in relation to the task at hand. Furthermore, integrating SMOTE requires a solid understanding of data quality and relevance, since noisy or unrepresentative minority examples will be replicated in the synthetic data and can produce misleading results.
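
One common safeguard when assessing generated data, offered here as an assumption rather than something the text above prescribes, is to split the data first and oversample only the training portion, so that evaluation uses real observations only. The sketch below uses toy Gaussian data; `oversample` and `split` are hypothetical helpers written for this example.

```python
import math
import random


def oversample(minority, n_new, k, rng):
    """Compact SMOTE-style interpolation (hypothetical helper)."""
    out = []
    for _ in range(n_new):
        p = rng.choice(minority)
        # k nearest other minority points, by Euclidean distance.
        neighbors = sorted((m for m in minority if m is not p),
                           key=lambda m: math.dist(p, m))[:k]
        q = rng.choice(neighbors)
        u = rng.random()
        out.append([a + u * (b - a) for a, b in zip(p, q)])
    return out


def split(points, frac, rng):
    """Shuffle a copy of the points and cut it into two parts."""
    points = points[:]
    rng.shuffle(points)
    cut = int(frac * len(points))
    return points[:cut], points[cut:]


rng = random.Random(42)

# Toy imbalanced data: 200 majority points and 20 minority points.
majority = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
minority = [[rng.gauss(2, 1), rng.gauss(2, 1)] for _ in range(20)]

# 1. Split each class first, so the test set holds only real observations.
train_maj, test_maj = split(majority, 0.75, rng)
train_min, test_min = split(minority, 0.75, rng)

# 2. Oversample the minority class in the training split only.
n_new = len(train_maj) - len(train_min)
synthetic = oversample(train_min, n_new, k=3, rng=rng)
balanced_train_min = train_min + synthetic

print(len(train_maj), len(balanced_train_min))  # 150 150
print(len(test_maj), len(test_min))             # 50 5
```

The test split keeps its original imbalance, which gives a more honest picture of how a model trained on the balanced data would behave on real inputs.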

The rise of synthetic data generation techniques like SMOTE is transforming AI learning pathways, driving innovative solutions across numerous sectors. Those eager to expand their understanding of AI should actively explore such methodologies, as they hold the potential to enhance decision-making processes significantly. Whether you’re in tech, healthcare, or finance, the implications of SMOTE are worth examining.

Conclusion: Embrace Synthetic Data for Successful AI Deployment

As businesses gear up to implement AI in their operations, understanding and leveraging techniques such as SMOTE can be pivotal. By addressing class imbalance and enhancing the richness of predictive models, organizations can unlock substantial value from their data. To further your journey in AI learning, consider implementing such techniques and exploring their impact on your specific sector. Embrace the future of AI with confidence, equipped with the knowledge from techniques like SMOTE!
