The Evolution of SAS Enterprise Guide: A Historical Overview

Since its inception in 1999, SAS Enterprise Guide has changed substantially, keeping pace with advances in SAS technology and with the needs of its users. Its version history offers a window into how data analysis tools have evolved and how those updates have shaped the data science landscape today.

Milestones in SAS Enterprise Guide Development

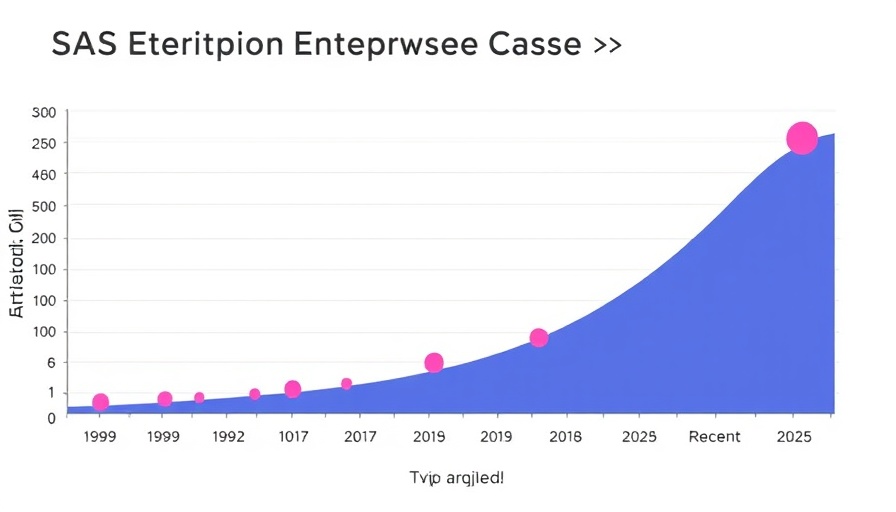

The timeline chart created by Chris Hemedinger shows the pivotal releases that responded to both technological advances and user demands. For example, version 1.2 launched alongside SAS 8.2 and marked a significant leap in user experience. More recent releases, such as version 8.5, work with SAS Viya 4, reflecting SAS's effort to bring its newer AI and machine learning capabilities within reach of Enterprise Guide users.

The Importance of Regular Updates and Features

Unlike the core SAS engine, SAS Enterprise Guide is updated frequently, as its long list of releases over the years shows. Features such as multilingual support and compatibility updates for new operating systems reflect SAS's commitment to a good user experience across diverse environments. These enhancements improve functionality and also fit the paths many people now take into AI, making the tool easier to use effectively for data scientists, especially those just moving into AI.

Understanding the Impact of SAS on AI Learning

SAS Enterprise Guide's ongoing enhancements give users a solid foundation for learning AI. Each update adds support for more sophisticated analytics and data management techniques, which helps organizations put AI technologies to work. As users progress along their AI learning paths, the software needs to combine intuitive interfaces with powerful capabilities, and that combination shapes how businesses turn data into insights.

The Future of SAS Enterprise Guide and AI Integration

Looking ahead, the future of SAS Enterprise Guide appears promising, particularly for AI integration. The recent connection to SAS Viya and upcoming developments suggest a push toward more AI-first capabilities, such as advanced machine learning algorithms and self-service analytics. Organizations will need to keep up with these trends and build them into their strategies.

Conclusion: Why Understanding SAS Enterprise Guide Matters

For professionals interested in AI technologies and their applications, understanding the history and ongoing evolution of SAS Enterprise Guide is valuable. By learning how each version aligns with technological innovations, especially in AI, users can adapt more readily and make fuller use of these tools. This knowledge can strengthen their strategies for applying AI effectively.

Take Action: If you’re passionate about exploring AI learning pathways, dive deeper into SAS Enterprise Guide to unlock its potential for your projects. Embrace the technological advancements and harness them to propel your data analysis efforts to new heights.
